How We Improved Multimodal Embeddings

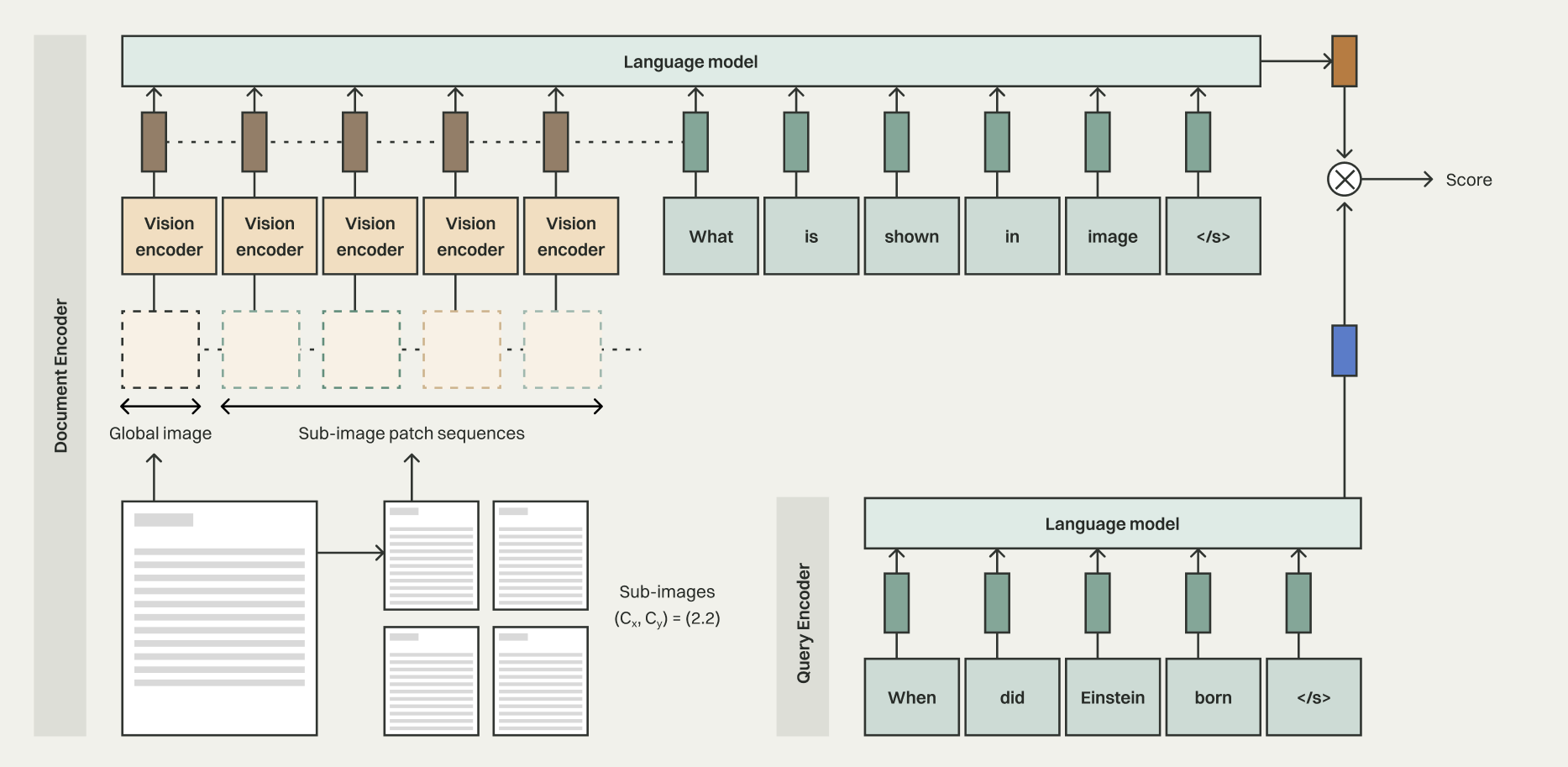

Building on these advances, we applied our learnings from training high-performance text embeddings to create even better multimodal embeddings. Starting with Qwen2.5-VL 3B Instruct as our baseline, we implemented several key improvements:

1. Sampling From the Same Source

We discovered that naive sampling across dataset sources allows models to learn shortcuts rather than semantic relationships. By forcing sampling from the same source, we create harder in-batch negatives that prevent the model from "cheating" and improve its understanding of content relationships.

Result: +2.9 point improvement on Vidore-v2 NDCG@5

2. Hard Negative Mining

We trained an initial dense model on the ColPali training dataset and VDR Multilingual Train Dataset, then used it to retrieve the top-k nearest neighbors for each query.

Additionally, we reduced false negatives using positive-aware hard negative mining, a technique first introduced in NV-Retriever.

Results:

- 1 Hard Negative: +3.5 points

- 4 Hard Negatives: +4.7 points

- 6 Hard Negatives: +5.2 points

Integration with Real World RAG Workflows

VLMs like Nomic Embed Multimodal simplify how RAG systems handle documents with rich visual content. Documents with with equations, diagrams, charts, and tables provide essential context alongside the text.

Technical documentation presents similar challenges - code blocks, flowcharts, and screenshots need to be understood together with their surrounding text. The same applies to product catalogs with specifications and images, or financial reports containing charts and numerical data.

By embedding visual and textual content together, retrieval becomes more accurate and integrations into real systems become much easier to implement and experiment with. Removing preprocessing steps often makes indexing faster and reduces complexity, and the single API for both images and text keeps implementations straightforward.

Conclusion

Nomic Embed Multimodal offers state-of-the-art performance while substantially simplifying the retrieval pipeline. As part of the broader Nomic Embed Ecosystem, this technology demonstrates our commitment to pushing the boundaries of embedding capabilities. To read more about our complete ecosystem, you can learn more on our detailed blog post.