Training Nomic Embed Vision

To address this shortcoming, we trained Nomic Embed Vision, a vision encoder that is compatible with Nomic Embed Text, our existing long-context text encoder. Training Nomic Embed Vision requires aligning a vision encoder with an existing text encoder without destroying the downstream performance of the text encoder.

We iterated on several approaches to solving this challenge. In each approach, we initialized the text encoder with Nomic Embed Text and the vision encoder with a pretrained ViT. We experimented with:

- Training the vision and text encoders on image-text pairs

- Training the vision and text encoders on image-text pairs and text-only data

- Three Towers training with image-text pairs only

- Three Towers training with image-text pairs and text-only data

- Locked-Image Text Tuning (LiT) training with a frozen vision encoder

- Locked Text Image Tuning, training with a frozen text encoder

Ultimately, we found that freezing the text encoder and training the vision encoder on image-text pairs only yielded the best results and provided the added bonus of backward compatibility with Nomic Embed Text embeddings.

We trained a ViT B/16 on DFN-2B image-text pairs for 3 full epochs for a total of ~4.5B image-text pairs. Our crawled subset ended up being ~1.5 billion image-text pairs.

We initialized the vision encoder with Eva02 MIM ViT B/16 and the text encoder with Nomic Embed Text. We trained on 16 H100 GPUs, with a global batch size of 65,536, a peak learning rate of 1.0e-3, and a cosine learning rate schedule with 2000 warmup steps.

For more details on the training hyperparameters and to replicate our model, please see the contrastors repository, or our forthcoming tech report.

Nomic Embed Vision was trained on Digital Ocean compute with early experiments on Lambda Labs compute clusters.

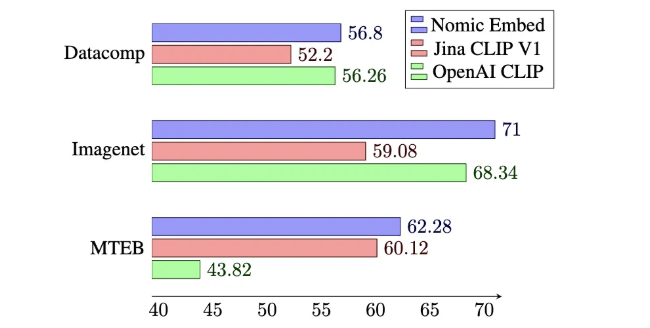

Evaluating Multimodal Embeddings

The original CLIP paper evaluated the multimodal model across 27 datasets including Imagenet zero-shot accuracy. Since then, progress on multimodal evaluation progressed with the introduction of the Datacomp benchmark. Much like how MTEB is a collection of tasks used to evaluate text embeddings, Datacomp is a collection of 38 image classification and retrieval tasks used to evaluate multimodal embeddings. Following Datacomp, we evaluated Nomic Embed Vision on the 38 downstream tasks, and found that Nomic Embed Vision outperforms previous models including OpenAI CLIP ViT B/16 and Jina CLIP v1.

Versioning the Nomic Latent Space

We have released two versions of Nomic Embed Vision, v1 and v1.5, which are compatible with Nomic Embed Text v1 and v1.5, respectively. All Nomic Embed models with the same version have compatible latent spaces and can be used for multimodal tasks.

Using Nomic Embed Vision

Model License

We are releasing Nomic-Embed-Vision under a CC-BY-NC-4.0 license. This will enable researchers and hackers to continue experimenting with our models, as well as enable Nomic to continue releasing great models in the future.

Nomic-Embed-Vision is now Apache 2.0 licensed!

For inquiries regarding production use, please reach out to sales@nomic.ai.

Nomic Embed on AWS Marketplace

In addition to using our hosted inference API, you can purchase dedicated inference endpoints on the AWS Marketplace. Please contact sales@nomic.ai with any questions.

Nomic Embed API

Nomic Embed can be used through our production-ready Nomic Embedding API.

You can access the API via HTTP and your Nomic API Key:

curl -X POST \

-H "Authorization: Bearer $NOMIC_API_KEY" \

-H "Content-Type: multipart/form-data" \

-F "model=nomic-embed-vision-v1.5" \

-F "images=@<path to image>" \

https://api-atlas.nomic.ai/v1/embedding/image

Additionally, you can embed via static URLs!

curl -X POST \

-H "Authorization: Bearer $NOMIC_API_KEY" \

-d "model=nomic-embed-vision-v1.5" \

-d "urls=https://static.nomic.ai/secret-model.png" \

-d "urls=https://static.nomic.ai/secret-model.png" \

https://api-atlas.nomic.ai/v1/embedding/image

In the official Nomic Python Client after you pip install nomic, embedding images is as simple as:

from nomic import embed

import numpy as np

output = embed.image(

images=[

"image_path_1.jpeg",

"image_path_2.png",

],

model='nomic-embed-vision-v1.5',

)

print(output['usage'])

embeddings = np.array(output['embeddings'])

print(embeddings.shape)

“Out beyond our ideas of tasks and modalities there is a latent space. I’ll meet you there."

Rumi (probably)